Fall 2011—May 2012

Article by Peter Torpey, Ben Bloomberg, Elena Jessop, and Akito van Troyer

The MIT Media Lab collaborated with London-based theater group Punchdrunk to create an online platform connected to their New York City production of Sleep No More. In the live show, masked audience members explore and interact with a rich environment, discovering their own narrative pathways. We developed an online companion world to this real-life experience, through which online participants partner with live audience members to explore the interactive, immersive show together. Pushing the current capabilities of web standards and wireless communications technologies, the system delivered personalized multimedia content, allowing each online participant to have a unique experience co-created in real time by their own actions and those of their onsite partner. This project explored original ways of fostering meaningful relationships between online and onsite audience members, enhancing the experiences of both through the affordances that exist only at the intersection of the real and the virtual worlds.

The first version of the extended Sleep No More was offered to a limited public in May 2012. Further elaborations and next steps are currently being evaluated.

Sleep No More is a successful immersive theatrical production created by the innovative British theater group Punchdrunk. Currently running in New York City, the production tells the story of Shakespeare's Macbeth intertwined with Alfred Hitchcock's 1940 film adaptation of Rebecca, based on the Daphne du Maurier novel of the same name. The New York production is set in the fictionally named McKittrick Hotel, a converted warehouse with a set consisting of over 100 cinematically detailed rooms distributed over the seven-story space. Audience members are masked upon entering the experience and instructed to explore but not to speak. The audience then wanders through the space as they please and soon discovers actors who, mostly through dance, enact the story over the course of the three-hour performance.

Punchdrunk approached the Media Lab in the fall of 2011 with the challenge of extending their existing production into an online experience. They provided the caveat that video and imagery of the physical experience fall short of capturing the immersive nature of being there and limit the sense of agency afforded to audience members. The augmented experience that the team at the Media Lab created connected individual audience members online with partners from the audience in the physical space. Each pair followed a narrative thread from the show that was further developed for this experience. The online participant interacted through a web interface with a predominantly text-based virtual environment that evoked the spaces of the physical show. The text interface was accompanied by an immersive soundscape streamed to the user's browser, as well as occasional moments of live and pre-recorded video and still imagery. Each onsite audience member participating in the experience was fitted with a specially enhanced version of the Sleep No More mask that had physiological and environmental sensors as well as bone conduction transducers to reproduce audio inside the participant's head without obstructing their ears. The sensor data communicated something of the individual's experience to their online counterpart, while the transducers allowed for some communication back to the onsite participant (triggered by circumstance or relayed from the online participant through an actress to fit the conceit of the storyline). Physical portals, which were actuated props, were installed discreetly throughout the physical set to provide moments of greater connection between the online and onsite participants. When the online audience member occupied the virtual portal space while their onsite partner was in the corresponding physical space, the participants could communicate through the portal object.
An initial trial of this extended experience was run over five performances in May of 2012.

Project team: Tod Machover, Punchdrunk, Ben Bloomberg, Gershon Dublon, Jason Haas, Elena Jessop, Nicholas Joliat, Brian Mayton, Simone Ovsey, Jie Qi, Eyal Shahar, Peter Torpey, and Akito van Troyer

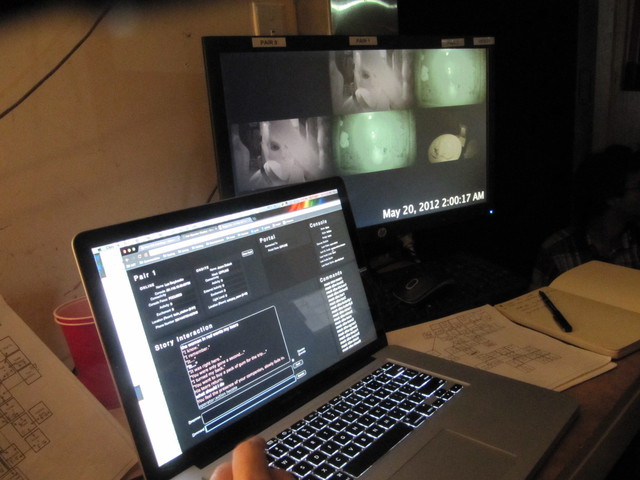

A master story logic system called “Cauldron” controlled all aspects of the performance experience for both online and onsite participants. Its architecture allowed the various facets of the system (online participants, onsite masks, media content, portals, operators, and the story) to interact and exchange information with each other through a set of rules and assets (text, media, behaviors). These rules and assets defined the virtual world and how it intersected with the real-time performance and the physical world, and described the range of actions available to participants and the responses those actions could produce.

Cauldron is essentially a show control system for coupled online-onsite performance. It can trigger events in both the real and virtual worlds and support many different interactions at once. Each pair of participants was served a unique interactive experience that was flexible in time and responsive to their actions, while still adhering to the constraints of the live performance to which they were connected.
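The rule-driven dispatch described above can be illustrated with a minimal sketch. This is not Cauldron's actual code: the `Event`, `Rule`, and `ShowControl` names, the event fields, and the command tuples are all hypothetical, chosen only to show how events from different facets might be matched against rules and turned into commands for each pair.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Event:
    source: str    # hypothetical facet name: "online", "mask", "portal", "operator"
    pair_id: str   # which online/onsite pair the event belongs to
    kind: str      # hypothetical event type, e.g. "enter_room"
    data: dict = field(default_factory=dict)


@dataclass
class Rule:
    matches: Callable[[Event, dict], bool]   # does this rule apply to the event?
    actions: Callable[[Event, dict], list]   # commands to emit when it does


class ShowControl:
    """Keeps separate story state per pair and runs every matching rule."""

    def __init__(self, rules):
        self.rules = rules
        self.state = {}  # pair_id -> story state for that pair

    def dispatch(self, event):
        pair_state = self.state.setdefault(event.pair_id, {})
        commands = []
        for rule in self.rules:
            if rule.matches(event, pair_state):
                commands.extend(rule.actions(event, pair_state))
        return commands


# Hypothetical rule: entering the library yields text and an audio cue.
def enter_library(event, state):
    return event.kind == "enter_room" and event.data.get("room") == "library"


def describe_library(event, state):
    state["room"] = "library"
    return [
        ("online", "show_text", "You are in a dim library."),
        ("audio", "play_cue", "library_ambience"),
    ]


control = ShowControl([Rule(enter_library, describe_library)])
commands = control.dispatch(
    Event("online", "pair-1", "enter_room", {"room": "library"})
)
```

Keeping state keyed by pair is one simple way to let many pairs run through the same rules at once without interfering with each other.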

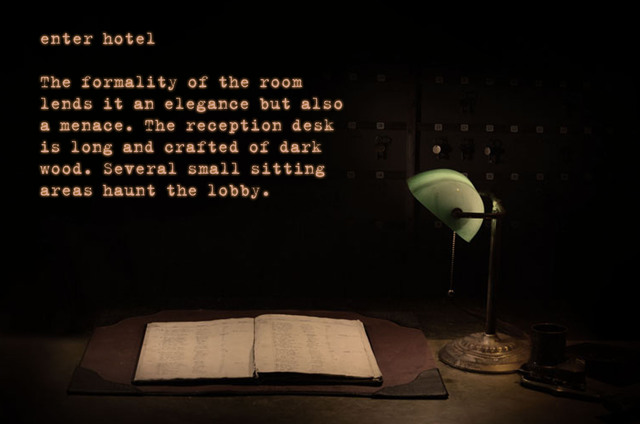

Online, the user interacted with a web-based console that provided text, images, video, and sound depending on the story and on how online and onsite participants interacted with the system. The online interface is intended to be viewed full-screen and consists of several layers. The back-most layer is a constant video stream, frequently just black, from a streaming server located onsite. Imagery may be displayed on top of that. Images with transparency allow the video to show through, while opaque images completely occlude any video. The topmost layer is a text component that may be animated and styled in response to story interaction. Text is presented to the user in this layer, and the user can respond and interact with the experience by entering their own text. Like the video stream, a high-latency audio stream was also delivered to the browser. Additionally, channels of low-latency audio sprites and audio loops played sounds in immediate response to user interaction.

A companion web interface let control room operators monitor and influence the interactions that online and onsite participants had with Cauldron and the story. The interface allowed the operator, a narrative orchestrator, to cover for any gaps in the story content or unexpected input by participants, so that the online participant would never feel stifled by computer-like responses. Drawing a parallel between the extended experience and Sleep No More, the role of the operator is something like that of a stage manager, a steward, and an actor, in that the operator can gently guide both the online and onsite participants.

A new narrative thread was devised and embedded into Sleep No More for the Media Lab/Punchdrunk collaboration. Narrative and descriptive content and rule systems were turned into a 5000+ line script file in a custom markup language (JEML). The script held the descriptions of the spaces in the world, the items in the world, the characters that could be found there, and much of the logic governing how online participants could interact with different parts of the world and what would happen when they did. A text parsing system analyzed a user’s (or operator’s) text-based input to determine the desired action, given pre-programmed knowledge of text recognition and story flow.
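To make the idea of mapping free text to a story action concrete, here is a minimal sketch in Python. It does not reproduce JEML or the project's parser, whose details are not given here. The verb table, the stopword list, and the `parse_command` function are hypothetical, and returning `None` stands in for the case where input is handed to a human operator.

```python
import re

# Hypothetical vocabulary: several surface verbs map to one canonical action.
VERBS = {
    "go": "move", "walk": "move", "enter": "move",
    "look": "examine", "examine": "examine", "inspect": "examine",
    "take": "take", "grab": "take",
}
STOPWORDS = {"the", "a", "an", "to", "at", "into", "in"}


def parse_command(text, known_targets):
    """Return (action, target) for recognized input, or None if unrecognized.

    known_targets is the set of rooms, items, and characters that exist in
    the participant's current part of the world (an assumption of this sketch).
    """
    words = [
        w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS
    ]
    if not words or words[0] not in VERBS:
        return None  # unknown verb: a candidate for operator intervention
    action = VERBS[words[0]]
    target = " ".join(words[1:])
    if target and target not in known_targets:
        return None  # verb understood, but the object is not in the world
    return action, (target or None)


targets = {"mirror", "ballroom"}
looked = parse_command("Look at the mirror", targets)
moved = parse_command("Walk into the ballroom", targets)
unknown = parse_command("sing loudly", targets)
```

A real system would need far richer language handling; the point of the sketch is only the separation between recognizing an intent and falling back when recognition fails.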

The components of the media distribution system for Sleep No More consisted of subsystems for video, audio and telephones. Each of these subsystems has similar components:

Together, these components enriched the participant’s experience beyond text and still imagery.

IP cameras placed throughout the Sleep No More space captured live video of the performance. Video was retrieved from these cameras over the internal network as MJPEG, and the streams were received by video rendering nodes that handled switching and effects processing of the live video. Each node subscribed to a multicast group to receive control commands from the story logic. Real-time video processing was applied to both live and pre-recorded clips before content was delivered to each online participant using Flash, H.264, and RTMP. Wowza was used to serve the video over RTMP so that it could be accessed by clients in the web browser.
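As an illustration of how a rendering node might subscribe to a multicast group for control commands, the sketch below uses Python's standard `socket` module. The group address, port, node names, and JSON message format are all assumptions made for the example; the original wire format is not described here.

```python
import json
import socket

GROUP = "239.1.2.3"  # hypothetical administratively scoped multicast address
PORT = 5007          # hypothetical UDP port


def open_control_socket(group=GROUP, port=PORT):
    """Create a UDP socket that has joined the given multicast group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    # ip_mreq structure: group address followed by the local interface
    # address (0.0.0.0 lets the operating system choose the interface).
    membership = socket.inet_aton(group) + socket.inet_aton("0.0.0.0")
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, membership)
    return sock


def decode_command(datagram, node_id):
    """Parse one datagram; return the command dict if it targets this node."""
    try:
        message = json.loads(datagram.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return None  # ignore malformed packets
    if not isinstance(message, dict):
        return None
    if message.get("node") not in (node_id, "all"):
        return None  # addressed to a different rendering node
    return message


# Hypothetical message as the story logic might send it.
packet = b'{"node": "video-3", "command": "switch", "camera": "cam-12"}'
command = decode_command(packet, "video-3")
```

In use, a node would call `open_control_socket()` once and then loop on `sock.recvfrom(65535)`, passing each datagram to `decode_command`. Multicast lets the story logic send one packet that every node sees, with each node filtering for the commands meant for it.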

The audio systems for Sleep No More were based around a virtual streaming and mixing environment running inside Reaper. The audio experience was organized into cues, coded in XML, which were executed by the script engine. Each cue could smoothly alter parameters of effects, inputs, and outputs, or play back pre-recorded material. Outputs were streamed in real time to online participants and to Android devices in the space using a combination of Icecast and Wowza streaming servers. Live inputs originated from performer microphones and could also be connected to telephones on the set and at participants’ homes. All content for the experience was encoded binaurally.
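The actual cue format is not given here, so the following sketch invents a small XML schema purely for illustration: a `cue` element containing `ramp` elements that name a parameter, a start and end value, and a duration. It shows one way a cue could "smoothly alter" a parameter, using linear interpolation over time. How the resulting values would reach Reaper is outside the sketch.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

# Hypothetical cue: element and attribute names are assumptions.
CUE_XML = """
<cue id="library_fade">
  <ramp target="reverb.mix" from="0.0" to="0.8" duration="4.0"/>
  <ramp target="output.gain" from="1.0" to="0.5" duration="2.0"/>
</cue>
"""


@dataclass
class Ramp:
    target: str      # name of the parameter being changed
    start: float     # value when the cue begins
    end: float       # value once the ramp completes
    duration: float  # ramp length in seconds

    def value_at(self, t):
        """Linearly interpolated value t seconds after the cue starts."""
        if self.duration <= 0:
            return self.end
        progress = min(max(t / self.duration, 0.0), 1.0)  # clamp to [0, 1]
        return self.start + (self.end - self.start) * progress


def load_cue(xml_text):
    """Parse a cue document into its id and a list of Ramp objects."""
    root = ET.fromstring(xml_text)
    ramps = [
        Ramp(
            target=r.get("target"),
            start=float(r.get("from")),
            end=float(r.get("to")),
            duration=float(r.get("duration")),
        )
        for r in root.findall("ramp")
    ]
    return root.get("id"), ramps


cue_id, ramps = load_cue(CUE_XML)
halfway = ramps[0].value_at(2.0)  # halfway through the 4-second reverb ramp
```

A script engine could call `value_at` on a timer and forward each value to the mixer, which is what makes the change gradual rather than a jump.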

Onsite audience members were outfitted with noninvasive wearable computers and sensors, and given masks with special actuators to transmit their presence to the online participants. Each set of wearables was equipped with a microphone, a temperature sensor, a heart rate monitor, an EDA sensor, a Bluetooth location sensor, and an RFID tag to capture an onsite participant’s activities, expressions, and state of mind. Masks were retrofitted with bone conduction headsets so that operators could send audio messages to onsite participants while leaving their ears free to listen to the immersive audio experience within the physical performance space of Sleep No More.

Portals (robotic props embedded seamlessly into the Sleep No More set to facilitate moments of communication between online and onsite participants) had several modular parts: a system for identifying users and triggering the proxemic interface; an embedded computer running networking and control software to coordinate the interaction with the central server; and the physical portal itself. A general framework was developed for distributed sensing and actuation. Multiple location systems tracked individuals in the space, real-time sensor data was coordinated centrally, and distributed devices were actuated in turn to direct specific users from place to place, convey relevant information, and respond to users’ interactions. At the same time, this system animated a virtual world with dynamic information from the physical environment, allowing online users to peek into the physical world.
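The condition under which a portal becomes active for a pair (the online participant in the virtual portal space while the onsite partner is in the corresponding physical space) can be sketched as a simple co-presence check. The portal name, room names, and the `Pair` structure below are hypothetical. In the real system the onsite location would come from the tracking systems described above.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping: portal name -> (virtual space, physical space).
PORTALS = {
    "mirror": ("virtual_dressing_room", "dressing_room"),
    "typewriter": ("virtual_office", "office"),
}


@dataclass
class Pair:
    online_room: Optional[str]  # where the online participant is in the virtual world
    onsite_room: Optional[str]  # last tracked location of the onsite partner


def active_portals(pair, portals=PORTALS):
    """Return the portals through which this pair can currently communicate."""
    return [
        name
        for name, (virtual_space, physical_space) in portals.items()
        if pair.online_room == virtual_space
        and pair.onsite_room == physical_space
    ]


together = active_portals(Pair("virtual_dressing_room", "dressing_room"))
apart = active_portals(Pair("virtual_dressing_room", "office"))
```

A central server could re-run this check whenever either participant's location changes and actuate the physical prop only while the result is non-empty.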

Network infrastructure was crucial to the project because it allowed all subsystems to communicate. Internet access for the project was provided by two redundant 50Mb/5Mb Time Warner Cable connections. Online clients were load balanced between the two Internet connections and back-end servers with a Cisco 2900 router connected to a variety of Cisco 3600 and 3700 series switches. A Cisco Aironet 3600 wireless system was installed using a 2500 controller and 24 Cisco 3602 access points throughout the building.

Switches were interconnected using LACP over multiple gigabit links. This allowed end-to-end Gigabit Ethernet and wireless N performance for the clients and servers on the network.

This project is made possible by gracious contributions from Cisco, Intel, Time Warner, and Motorola.